Machine Art: Stable Diffusion (on Apple Silicon)

Testing Stable Diffusion on Apple Silicon.

Background

Stable Diffusion is a text-to-image diffusion model that generates an image from a given text prompt. I wanted to test it on my M1 MacBook.

Setup

Setup was fairly simple. I followed the instructions on Lincoln Stein’s fork of Stable Diffusion for M1 Macs, and despite all the disclaimers, the process worked like a charm. Both Stable Diffusion and the PyTorch nightly builds are changing rapidly, so my smooth experience may come down to lucky timing. YMMV.

I do experience some of the bugs already reported as issues – occasionally my generated output is a black square.

Getting any output image was slow, since I couldn’t take advantage of CUDA cores and was running everything on the CPU. I am lucky enough to have a friend with a beefy Nvidia GPU though – I’ve attached some of the interesting images generated on his machine at the end.
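To make the CUDA-versus-CPU point concrete, here is a minimal sketch of how a PyTorch script might pick a device. It is not code from the fork I used: `pick_device` and `detect_device` are names I made up for illustration, and whether a given Stable Diffusion build can actually use Apple's MPS backend depends on the PyTorch version installed.

```python
def pick_device(cuda_available: bool, mps_available: bool) -> str:
    """Return a device name, preferring CUDA, then Apple's MPS, then CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


def detect_device() -> str:
    """Probe the local PyTorch install; fall back to CPU if torch is missing."""
    try:
        import torch
    except ImportError:
        return "cpu"

    cuda = torch.cuda.is_available()
    # torch.backends.mps only exists in newer PyTorch builds, so guard the lookup.
    mps_backend = getattr(torch.backends, "mps", None)
    mps = bool(mps_backend is not None and mps_backend.is_available())
    return pick_device(cuda, mps)


if __name__ == "__main__":
    print(f"Would run on: {detect_device()}")
```

On an M1 this never returns `cuda`, so the choice is between `mps` and `cpu`. On my friend's RTX 3080 it would return `cuda`.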

Images

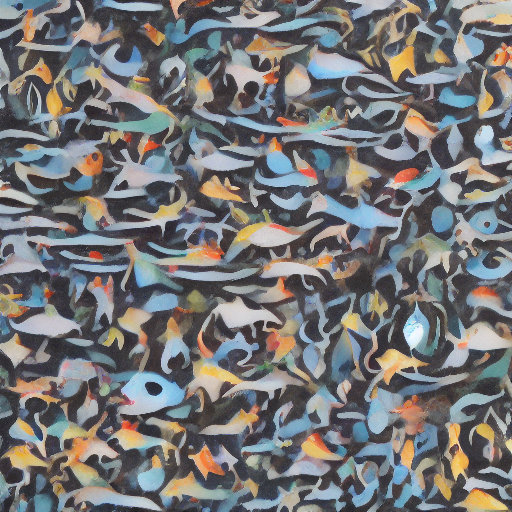

“ocean”. The first photo was created with only one pass.

“game of thrones anime”

“thanos eating popcorn”

“dog king”

“cat in armor high def”

I also tried out the img2img command, turning the last photo into a “drawing”.

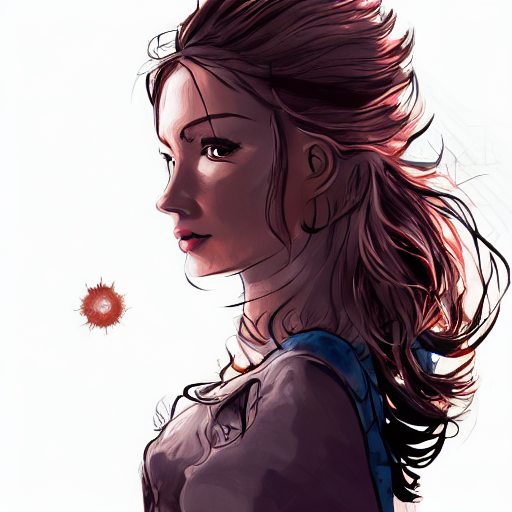

And, a profile photo for myself.

More Images

These photos were generated on my friend’s desktop, which has an AMD Ryzen 9 5950X and an RTX 3080 FE. Super fast – much easier to iterate on good prompts and search for better seeds.

The following is the original generated from txt2img; the rest are alternatives/improvements using img2img.

![]()